GitHub repository: [link]

Over the past month, I’ve been tinkering with Android Studio, trying to integrate it seamlessly with YARP in particular (and the iCub software architecture in general). I really believe that a robot can benefit dramatically from the many sensors a smartphone provides. The benefit can go both ways: better HRI for the user, better feedback from the robot, and so on.

To this end, I worked (mainly with Francesco Romano, for some low-level Java support) on cross-compiling YARP for ARMv7 and on implementing a working JNI interface to switch back and forth between the Java layer and the C++ one. As a result, I developed an Android application (aptly named yarpdroid), which provides a template for the basic functionalities I worked on, as well as a couple of ready-made use cases. The code is available at https://github.com/alecive/yarpdroid.

A quick tutorial on how to cross-compile YARP is provided here.

Features

YARPdroid application

- Nice Material Design interface (because why not)

- Google Glass interface for a couple of amazing things I will talk about later on

JNI Interface

The JNI interface has been a real mess. It required cross-compiling the YARP core libraries (for now, YARP_OS, YARP_init, and YARP_sig), which was no small feat. I’ll upload all of the steps required to do this from scratch, but for now there are pre-compiled libraries in the GitHub repository to ease the process. What I managed to do was:

- Sending data (of any kind) from the smartphone to the YARP network

- Receiving data (of any kind) on the smartphone from the YARP network

- Sending images from the YARP network to the smartphone (e.g. iCub’s cameras)

The only thing missing to provide a complete template application is sending images from the smartphone to the YARP network. It is not an easy task, but we’re working on it.
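To give an idea of what the Java side of such a JNI bridge can look like, here is a minimal sketch. It is not the code from the repository: the class name `YarpBridgeSketch`, the library name `yarpdroid_jni_example`, and the native method `nativeWrite` are all hypothetical, and the matching C++ function (which would do the actual YARP port handling) is not shown. The sketch only illustrates the pattern of loading a cross-compiled native library and guarding calls into it.

```java
// Hypothetical Java-side wrapper around a cross-compiled native library.
// All names here are assumptions made for illustration only.
final class YarpBridgeSketch {
    // Try to load the native library once, when the class is initialized.
    private static final boolean LOADED = tryLoad("yarpdroid_jni_example");

    private YarpBridgeSketch() {}

    private static boolean tryLoad(String libraryName) {
        try {
            System.loadLibrary(libraryName);
            return true;
        } catch (UnsatisfiedLinkError | SecurityException e) {
            // Library not packaged for this platform (or loading not allowed).
            return false;
        }
    }

    // Implemented in C++ on the other side of the JNI boundary (not shown).
    private static native boolean nativeWrite(String portName, String message);

    /** Reports whether the native layer could be loaded. */
    static boolean isAvailable() {
        return LOADED;
    }

    /** Sends a text message through the native layer; returns false if it is unavailable. */
    static boolean send(String portName, String message) {
        if (!LOADED) {
            return false;
        }
        return nativeWrite(portName, message);
    }
}
```

Checking `isAvailable()` before calling `send(...)` lets the rest of the application degrade gracefully on devices where the pre-compiled libraries do not match the CPU architecture.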

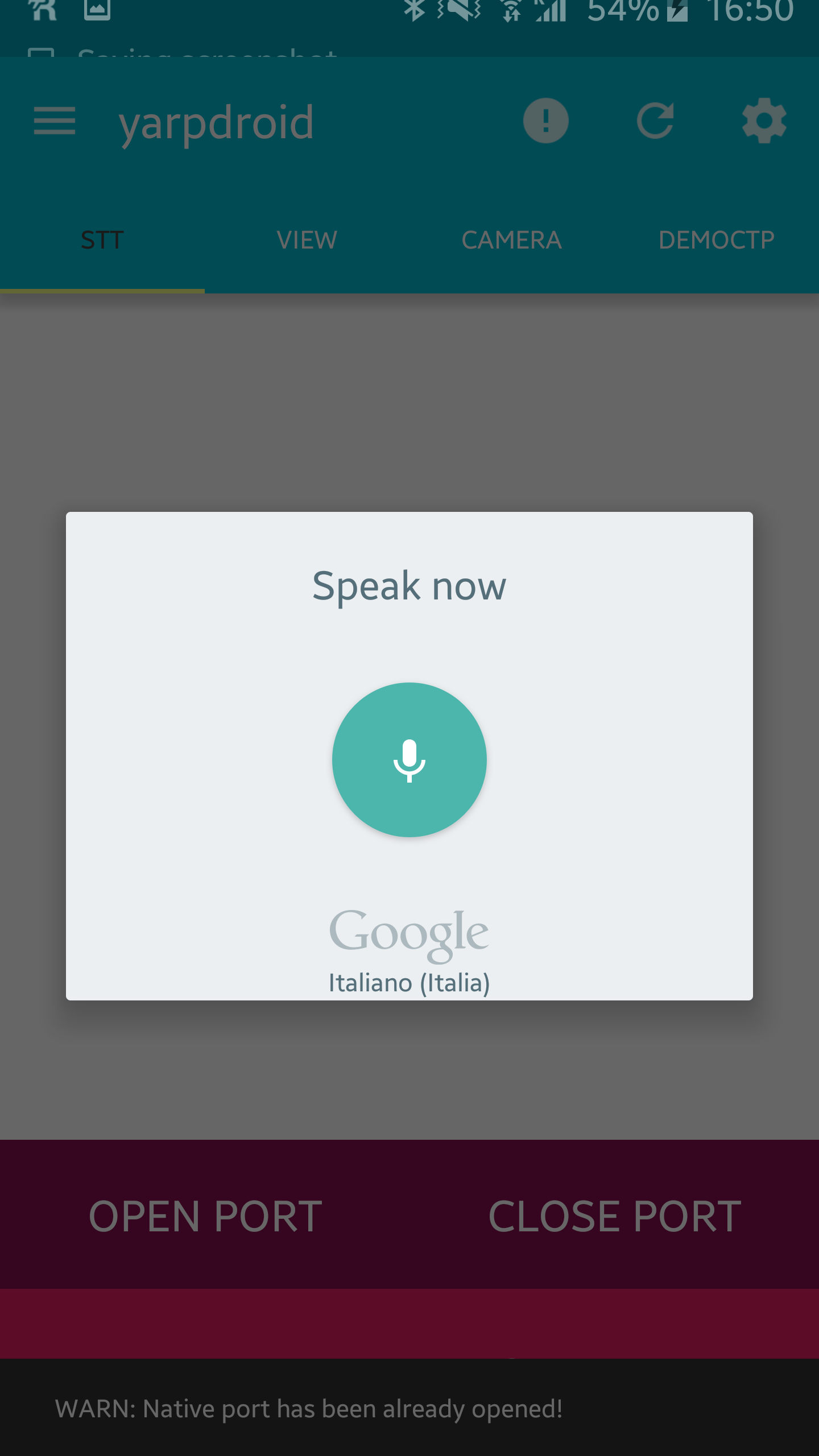

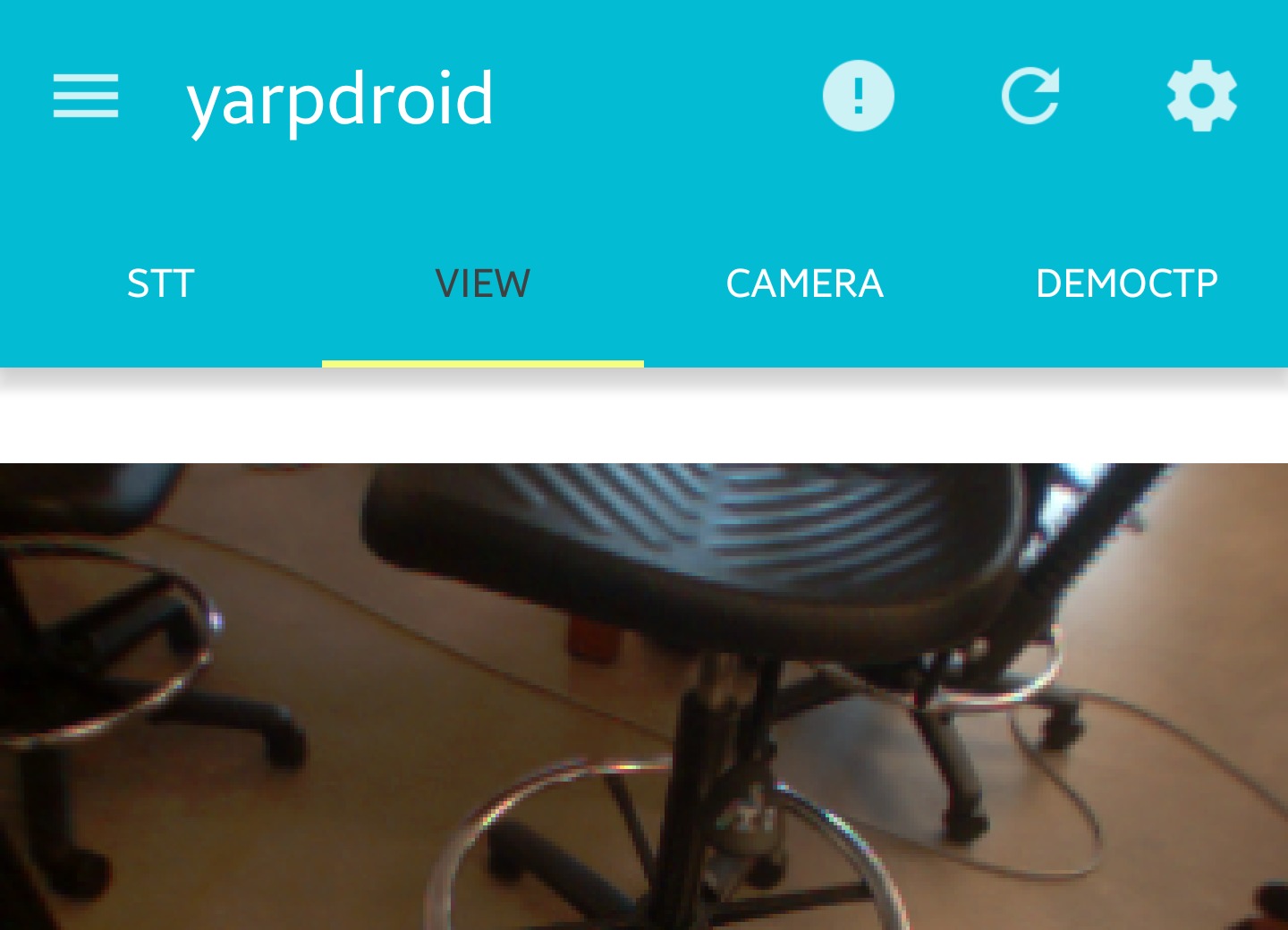

Screenshots

Videos

Video #1

In this video, I show what happens when you give users a natural human-robot interface. The user can see what the robot sees and can direct its gaze by simply clicking on points in the image. Pretty neat!
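One step behind the click-to-gaze interaction is converting a touch position on the screen into a pixel position in the robot's camera image. The sketch below shows one way to do it, assuming the image is scaled uniformly and centered inside the view (letterboxed); the class name `TouchToPixelSketch` and the 320x240 image size in the usage note are assumptions, not details taken from the application.

```java
// Hypothetical helper: maps a touch point in view coordinates to a pixel
// in the camera image, assuming the image is fitted and centered in the view.
final class TouchToPixelSketch {
    private TouchToPixelSketch() {}

    /** Returns {u, v} in image pixels, or null if the touch lands outside the image. */
    static int[] map(float touchX, float touchY,
                     int viewWidth, int viewHeight,
                     int imageWidth, int imageHeight) {
        // Uniform scale that fits the whole image inside the view.
        float scale = Math.min((float) viewWidth / imageWidth,
                               (float) viewHeight / imageHeight);
        // Margins left around the centered image.
        float offsetX = (viewWidth - imageWidth * scale) / 2f;
        float offsetY = (viewHeight - imageHeight * scale) / 2f;
        // Undo the offset and the scaling to get back to image coordinates.
        float u = (touchX - offsetX) / scale;
        float v = (touchY - offsetY) / scale;
        if (u < 0 || v < 0 || u >= imageWidth || v >= imageHeight) {
            return null; // touch fell on the letterbox margin
        }
        return new int[] {(int) u, (int) v};
    }
}
```

For example, with a 320x240 image shown in a 1280x960 view, a touch at the center of the view maps to pixel (160, 120), which could then be sent to the robot as a gaze target.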

Video #2

This video showcases an interface between the iCub and the Google Glass through the yarpdroid application. The gyroscope on the Google Glass is used to remotely control the gaze of the iCub robot. The image from the robot’s left camera is then sent to the Google Glass. Although it’s a quick and dirty implementation (the output of the gyroscope would greatly benefit from a Kalman filter), this video demonstrates how a user can seamlessly teleoperate the head of the robot with little to no additional machinery. I can also go faster than that, but unfortunately I do not have a video for that!
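As a rough illustration of the kind of filtering mentioned above, here is a minimal scalar Kalman filter that smooths one noisy sensor axis. It is a sketch under strong assumptions and is not part of the application: the state is modeled as a random walk, the noise variances `q` and `r` would have to be tuned by hand, and the class name `ScalarKalmanSketch` is hypothetical. A real implementation would probably use a richer model (for instance, fusing the gyroscope with other sensors).

```java
// Hypothetical one-dimensional Kalman filter with a random-walk state model.
final class ScalarKalmanSketch {
    private final double processVariance;     // q: how much the true value may drift per step
    private final double measurementVariance; // r: how noisy each reading is
    private double estimate;                  // current state estimate
    private double variance;                  // uncertainty of the estimate

    ScalarKalmanSketch(double processVariance, double measurementVariance,
                       double initialEstimate, double initialVariance) {
        this.processVariance = processVariance;
        this.measurementVariance = measurementVariance;
        this.estimate = initialEstimate;
        this.variance = initialVariance;
    }

    /** Feeds one measurement and returns the updated estimate. */
    double update(double measurement) {
        // Predict: the state is assumed unchanged, but uncertainty grows.
        variance += processVariance;
        // Correct: blend prediction and measurement according to the Kalman gain.
        double gain = variance / (variance + measurementVariance);
        estimate += gain * (measurement - estimate);
        variance *= (1.0 - gain);
        return estimate;
    }
}
```

Each new gyroscope reading on a given axis would be passed through `update(...)`, and the returned value used instead of the raw reading. A larger `measurementVariance` gives a smoother but slower response.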

This project was carried out during MBL 2015 (Woods Hole, MA), as a result of a collaboration with Shue Ching Chia, from the Knowledge-Assisted Vision Lab at the A-Star Institute for Infocomm Research.

Video #3

In this super-lowres video, Android speech recognition is integrated into the iCub platform in order to provide a natural interface with the human user. Sorry for the quality!